Study of Wowza load balancing schemes

Posted By : Oodles Admin | 23-Jun-2016

Many of us already know that a load balancer becomes necessary when live and on-demand streaming has to scale. A load balancer distributes client playback connections among edge servers. This helps sustain heavier traffic and allows the deployment network to be resized dynamically, though how far to go depends on demand and cost. With the popularity of streaming media, load balancing is expected to become a standard feature of any quality streaming system.

Let’s take a quick look at the commonly used load balancing schemes and when to use each of them.

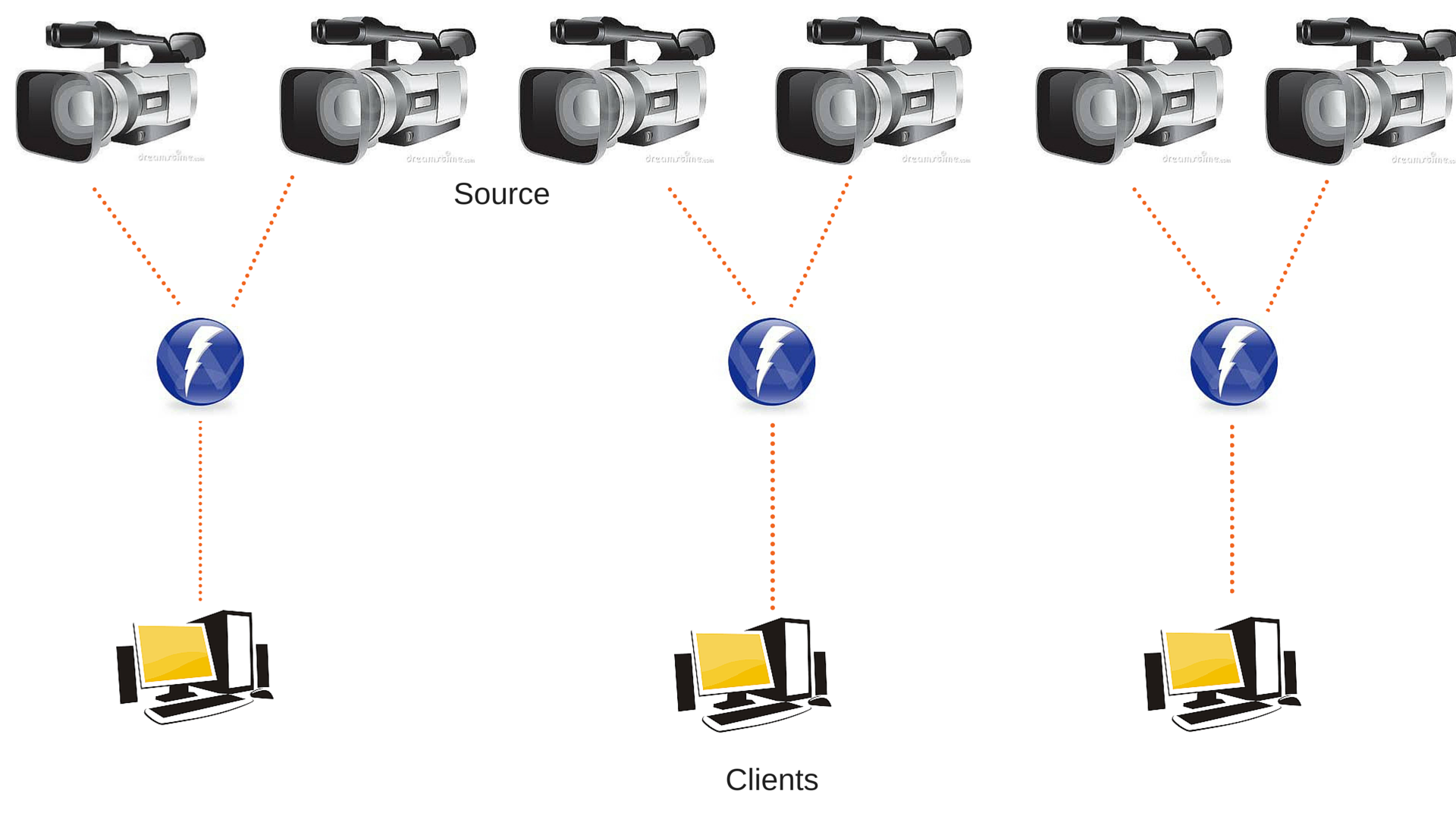

Parallel Schemes:

The parallel scheme is the easiest and most direct form of load balancing. Multiple independent servers run the same type of application, and the load balancer’s role is to direct client traffic to the least occupied server. For further optimization, rooms can be created: a specific server is associated with a group of clients that share a purpose, so all the participants in a room connect to the same server.
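The selection logic described above can be sketched in a few lines of Python. This is only an illustration, not Wowza's API: the `ParallelBalancer` class, the server names and the connection counts are all hypothetical, and a real balancer would read load from the servers rather than count connections itself.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ParallelBalancer:
    """Toy balancer for independent servers running the same application."""

    # Active connection count per server (hypothetical names and numbers).
    connections: Dict[str, int]
    # Room name -> server already chosen for that room.
    rooms: Dict[str, str] = field(default_factory=dict)

    def pick_server(self, room: Optional[str] = None) -> str:
        if room is not None and room in self.rooms:
            # Everyone in a known room goes to that room's server.
            server = self.rooms[room]
        else:
            # Otherwise pick the server with the fewest connections.
            server = min(self.connections, key=self.connections.get)
            if room is not None:
                self.rooms[room] = server
        self.connections[server] += 1
        return server


balancer = ParallelBalancer({"wowza-a": 4, "wowza-b": 3, "wowza-c": 7})
first = balancer.pick_server(room="lobby")   # least loaded server, bound to "lobby"
second = balancer.pick_server(room="lobby")  # same server, because of the room
third = balancer.pick_server()               # no room: least loaded server again
print(first, second, third)
```

Note that the room binding ignores load on purpose, which is exactly why a fast-growing room can overload its server in this scheme.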

A parallel scheme load balancer can be used in one-to-one, many-to-many and one-to-many applications. Its benefits: it can be implemented easily even for systems that were not designed with scalability in mind, servers can easily be added to or removed from the deployment, and latency is low. It also has problem areas: a room can hold only a limited number of concurrent clients, and it is hard to estimate how a room’s client count will grow, which might result in server overload. There is also no backup connection.

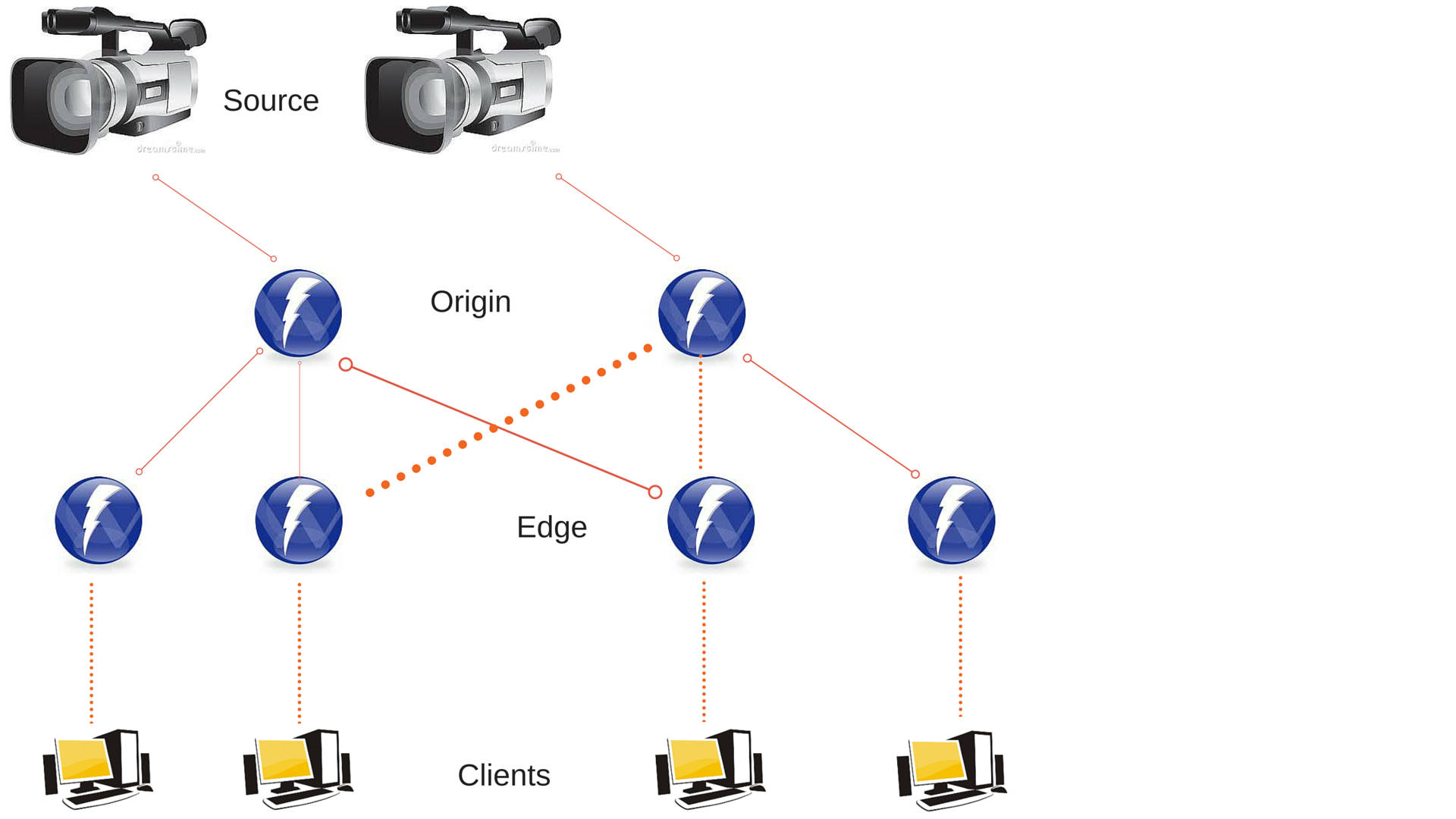

Edge-Origin:

It is one of the most thoroughly tested solutions. The original stream can be replicated on any number of edge servers. Because the flow from the edge servers can be controlled, dynamic connections are feasible. Under this scheme, an origin-based load balancer can be implemented. This load balancer can determine each edge node’s load and KPIs when redirecting a client to an available or less loaded server.
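As a rough sketch of that redirect decision, assume each edge reports a single load figure between 0 and 1 to the origin-side balancer. The `choose_edge` function, the edge names and the 0.9 threshold are assumptions made for illustration; they are not part of Wowza.

```python
from typing import Dict, Optional


def choose_edge(edge_load: Dict[str, float], max_load: float = 0.9) -> Optional[str]:
    """Return the least loaded edge below max_load, or None if every edge is full."""
    # Drop edges that already report a load at or above the threshold.
    available = {name: load for name, load in edge_load.items() if load < max_load}
    if not available:
        return None
    return min(available, key=available.get)


# Hypothetical load reports collected from three edge servers.
edge_load = {"edge-1": 0.72, "edge-2": 0.35, "edge-3": 0.95}
target = choose_edge(edge_load)
print(target)
```

Returning `None` when every edge is above the threshold gives the caller a clear signal to add another edge instead of overloading an existing one.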

An edge-origin load balancer can be used in one-to-many applications. Like parallel schemes, it is easy to configure, and edge servers are easy to add or remove. Unlike the parallel scheme, it can serve a much larger number of clients, and it offers a way to eliminate server overload. Dynamic origin binding can be used to connect to any origin on demand, while static origin binding can be used with multiple origins, both for load balancing and as a backup against origin failure. In addition, it has a low inter-server bandwidth footprint.

But it cannot be denied that client-to-client RPC is not possible when clients are connected through different edges and origins. Also, a delivery delay can be introduced the first time a media stream is consumed from an edge, because the edge first has to connect to the origin and fill its buffer.

Layered Origin-Edges:

A variation on the edge-origin scheme introduces the notion of an intermediate origin layer. It is one of the popular schemes because it allows huge scalability, so it is in high demand among CDNs. With it, millions of concurrent clients can be served. Sub-networks can be managed separately. Such an architecture allows a large number of independent networks to act as resellers of centrally generated content.

It is widely used for content distribution networks and one-to-many applications. Compared with the rest of the schemes, it can serve a greater number of clients. It is also very easy to add an edge or edge-origin server to the deployment, and configuration is easy. Unfortunately, here too client-to-client RPC is not possible among clients connected through different edges, origins and edge-origins. Removing an edge-origin server might disrupt a large sub-network. The number of intermediate levels adds latency due to buffering, and first-time consumption from a lower level introduces a larger delay.
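A back-of-the-envelope calculation shows both sides of this trade-off. The figures below are invented for illustration, and the delay model (each server-to-server hop fills its own buffer before forwarding) is a simplifying assumption, not a measured Wowza behaviour.

```python
def layered_capacity(mids_per_origin: int, edges_per_mid: int, clients_per_edge: int) -> int:
    """Clients reachable from one origin through one intermediate layer."""
    return mids_per_origin * edges_per_mid * clients_per_edge


def first_play_delay(server_hops: int, buffer_seconds: float) -> float:
    """Rough first-play delay if every server-to-server hop fills its own buffer."""
    return server_hops * buffer_seconds


# Hypothetical deployment: 10 edge-origins, 20 edges each, 5000 clients per edge.
total_clients = layered_capacity(mids_per_origin=10, edges_per_mid=20, clients_per_edge=5000)

# One hop (origin -> edge) versus two hops (origin -> edge-origin -> edge).
edge_origin_delay = first_play_delay(server_hops=1, buffer_seconds=1.5)
layered_delay = first_play_delay(server_hops=2, buffer_seconds=1.5)

print(total_clients, edge_origin_delay, layered_delay)
```

Under these assumptions the extra layer multiplies the reachable audience by the fan-out of the intermediate layer, while the first-play delay grows only linearly with the number of hops.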

Star Balancing:

This load balancer is the most fascinating of the schemes mentioned here. It lets you establish dynamic interactions among servers and achieve the best QoS with flexibility. It also gives access to any client’s stream through the fastest possible connection.

This scheme can implement one-to-one, one-to-many and many-to-many applications, and it performs well under critical bandwidth. In star balancing, every node works as both origin and edge, which makes the scheme very dynamic and extendable. It also offers geographical client-server pairing. On the negative side, it requires low-latency connections among the servers. As with the others, client-to-client RPC is not possible, and the inter-server bandwidth footprint is larger. Compared with other schemes, it supports lower maximum traffic, and its complexity, including client-server pairing, is hard to handle. Static origin binding is not feasible.
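The idea that every node is both origin and edge can be sketched as follows. The `StarCluster` class, the node names and the region labels are hypothetical, and matching on a region label is only a stand-in for whatever latency or geography measurement a real deployment would use.

```python
from typing import Dict, Tuple


class StarCluster:
    """Toy model of star balancing: any node can publish or serve any stream."""

    def __init__(self, node_region: Dict[str, str]) -> None:
        self.node_region = node_region        # node name -> region label
        self.stream_home: Dict[str, str] = {}  # stream name -> publishing node

    def nearest_node(self, client_region: str) -> str:
        # Prefer a node in the client's region, else fall back to the first node.
        for node, region in self.node_region.items():
            if region == client_region:
                return node
        return next(iter(self.node_region))

    def publish(self, stream: str, client_region: str) -> str:
        # The publisher's nearest node becomes the origin for this stream.
        node = self.nearest_node(client_region)
        self.stream_home[stream] = node
        return node

    def play(self, stream: str, client_region: str) -> Tuple[str, str]:
        """Return (node the viewer connects to, node that holds the stream)."""
        return self.nearest_node(client_region), self.stream_home[stream]


cluster = StarCluster({"fra-1": "eu", "nyc-1": "us", "sgp-1": "asia"})
origin = cluster.publish("talk", client_region="us")
eu_viewer = cluster.play("talk", client_region="eu")
us_viewer = cluster.play("talk", client_region="us")
print(origin, eu_viewer, us_viewer)
```

The European viewer connects to a nearby node that has to pull the stream from the publishing node, which hints at why this scheme needs low-latency links between servers and carries a larger inter-server bandwidth footprint.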


About Author

Oodles Admin

Divya has more than 6 years of industrial experience in different domains – SAP EP, Search Quality Operations and Content Writing. She loves travelling across the world and also enjoys watching movies.